biography

Azumi Maekawa (前川和純) is a project lecturer in the Research Center for Advanced Science and Technology at the University of Tokyo, Japan.

He received his Ph.D. for his research on the design of human-machine cooperative systems in 2021.

The focus of his work is on boundaries between living things and artifacts. He is involved in interdisciplinary projects across various fields such as robotics, cognitive psychology, computer science and human-computer interaction.

His work has been presented in various exhibitions and conferences including Ars Electronica Festival, ACM SIGGRAPH, and IEEE IROS.

His major awards include the ACM CHI Honorable Mention Award (Top 5%) and the 23rd Japan Media Arts Festival Entertainment Division New Face Award.

2021

Ph.D. in Engineering, The University of Tokyo

2018

M.S. in Engineering (Mechanical Engineering), The University of Tokyo

2016

B.Sc. in Engineering (Mechanical Engineering), The University of Tokyo

works

Paradox of the Mass

This work consists of a stone, a motor-driven propeller, and an intricately crafted pivot that supports them. Through precise mechanical design and careful adjustment of the center of gravity, it achieves gentle movement of the stone with minimal force. By essentially deconstructing the inherent attribute of mass that matter possesses, it offers a novel visual experience derived from the familiar presence of stone.

exhibition:

-

Mugen Room by Air Max DN8

Paradox of the Mass

Ryota Kito + Azumi Maekawa

Sankei-Shimbun Koto Center, Tokyo, Japan

2025


Redirected Remapping

This study investigates how both the body part used to control a VR avatar and the avatar's appearance affect redirection detection thresholds. We conducted experiments comparing hand and foot manipulation of two types of avatars: a hand-shaped avatar and an abstract spherical avatar. Our results show that, irrespective of the body part used, the redirection detection threshold increased by 21% when using the hand avatar compared to the abstract avatar. Additionally, when the avatar's position was redirected toward the body midline, the detection threshold increased by 49% compared to redirection away from the midline. No significant differences in detection thresholds were observed between the hand and foot manipulations. These findings suggest that avatar appearance and redirection direction significantly influence user perception in VR environments, offering valuable insights for the design of full-body VR interactions and human augmentation systems.

publication:

-

Ryutaro Watanabe*, Azumi Maekawa*, Michiteru Kitazaki, Yasuaki Monnai, Masahiko Inami. "Redirection Detection Thresholds for Avatar Manipulation with Different Body Parts." IEEE Transactions on Visualization and Computer Graphics (2025). (* These authors contributed equally.)

[pdf] [doi]


Stone Contours in Light

A work that visualizes and amplifies the natural undulations and irregularities of a stone through reflected light. In contrast to homogenized and standardized artificial objects, the stone's inherently amorphous form influences the reflection of light, creating a unique rhythm and landscape within the space.


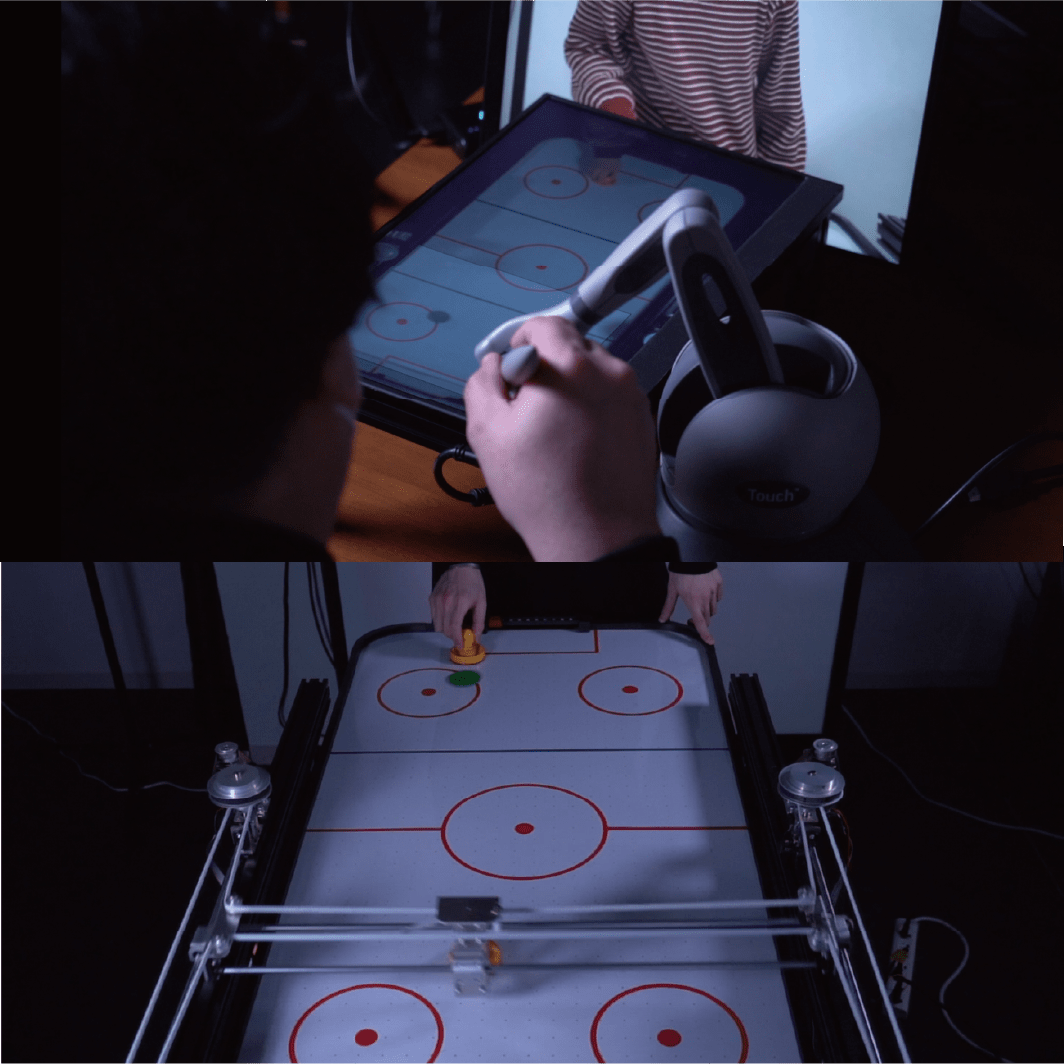

Behind the Game

When inter-personal sports games are played remotely, the time lag between user actions and feedback degrades the user’s performance and sense of agency. While computational assistance can improve performance, naive intervention that ignores context also compromises the user’s sense of agency. We propose a context-aware assistance method that restores both user performance and sense of agency, and we demonstrate the method using air hockey (a two-dimensional physical game) as a testbed. Our system includes a 2D plotter-like machine that controls the striker on half of the table surface, and a web application interface that enables manipulation of the striker from a remote location. Using our system, a remote player can play against a physical opponent from anywhere through a web browser. We designed the context-based striker control assistance by computationally predicting the puck’s trajectory from real-time captured video. With this assistance, the remote player shows improved performance without a compromised sense of agency, and both players can experience the excitement of the game.
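As a rough illustration of what predicting a puck's trajectory can involve, the sketch below estimates where a puck will cross a given line on the table. It is not the system's actual predictor: it assumes a frictionless puck moving at constant velocity, perfectly elastic side walls at `x = 0` and `x = width`, and a puck state already extracted from the video. The function name `predict_crossing_x` and all parameters are hypothetical.

```python
def predict_crossing_x(x, y, vx, vy, line_y, width):
    """Predict the x position at which the puck crosses the line y = line_y.

    Assumptions (illustrative only): constant velocity, no friction, and
    perfectly elastic side walls at x = 0 and x = width.
    Returns None if the puck is not moving toward the line.
    """
    if vy == 0:
        return None  # moving parallel to the line, never crosses it
    t = (line_y - y) / vy
    if t < 0:
        return None  # moving away from the line
    # "Unfold" the wall bounces: travel in a straight line on an infinite
    # table, then fold the result back into the interval [0, width].
    unfolded = x + vx * t
    folded = unfolded % (2.0 * width)  # always in [0, 2 * width)
    return folded if folded <= width else 2.0 * width - folded


# Example: a puck at (0.5, 0.0) moving diagonally bounces once off the
# right wall before reaching the line y = 1.0.
crossing = predict_crossing_x(0.5, 0.0, 1.0, 1.0, line_y=1.0, width=1.0)
```

A striker controller could move toward `crossing` ahead of the puck's arrival; how much of that motion to blend with the remote player's own input is the context-dependent part that this sketch does not cover.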

publication:

-

Azumi Maekawa, Hiroto Saito, Narin Okazaki, Shunichi Kasahara, and Masahiko Inami. "Behind The Game: Implicit Spatio-Temporal Intervention in Inter-personal Remote Physical Interactions on Playing Air Hockey." ACM SIGGRAPH 2021 Emerging Technologies, ACM, 2021.


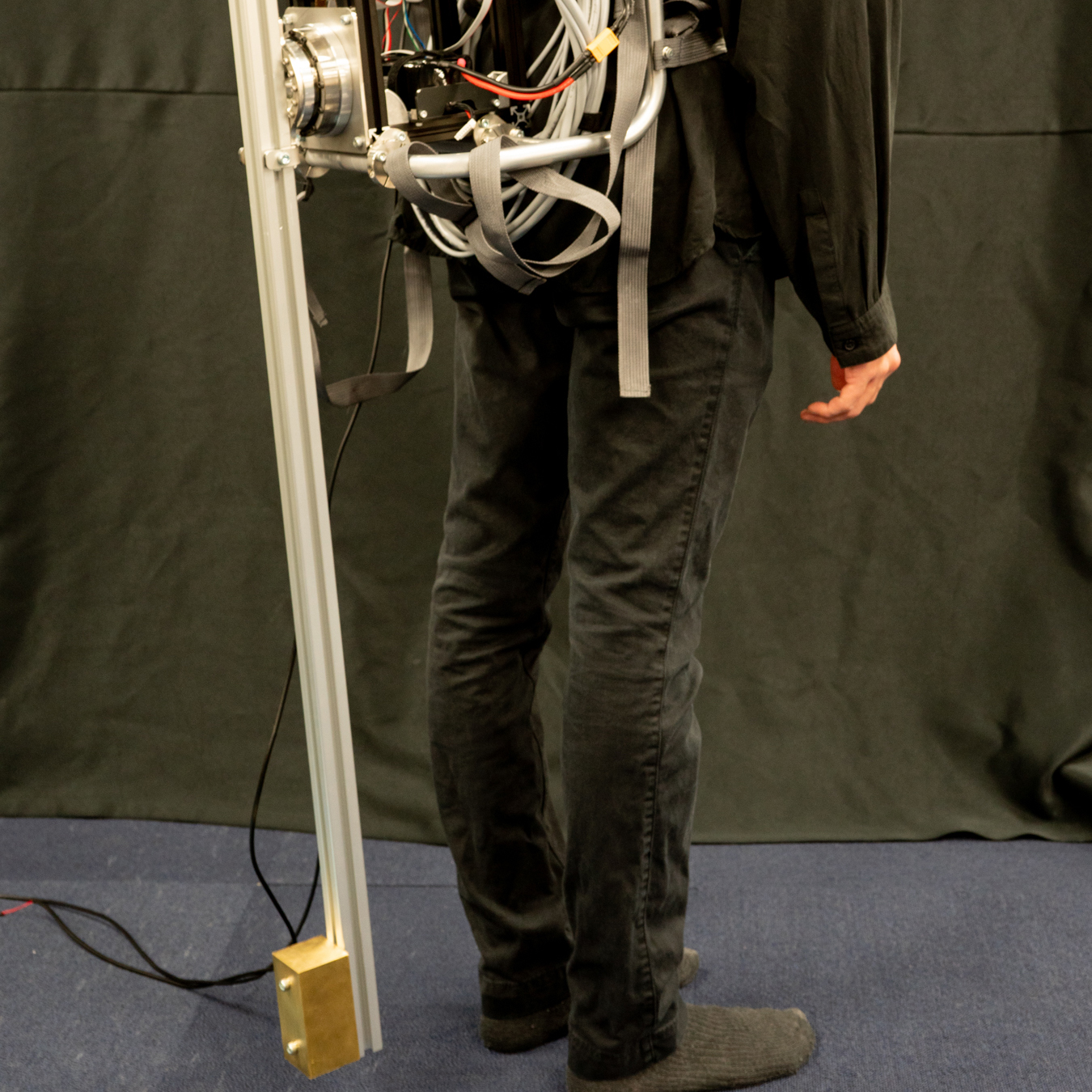

Tail-like Balancer

Reduced balance ability can lead to falls and critical injuries. To prevent falls, humans use reaction forces and torques generated by swinging their arms. Animals use a similar strategy with their tails. Inspired by these strategies, we propose an approach that uses a robotic appendage to support human balance without assistance from environmental contact. As a proof of concept, we developed a wearable robotic appendage that has one actuated degree of freedom and rotates around the sagittal axis of the wearer. To validate the feasibility of the approach, we conducted an evaluation experiment with human subjects. Controlling the robotic appendage improved the subjects' balance ability and enabled them to withstand up to 22.8% larger impulse disturbances on average than in the fixed-appendage condition.

publication:

-

Azumi Maekawa, Kei Kawamura, and Masahiko Inami. "Dynamic Assistance for Human Balancing with Inertia of a Wearable Robotic Appendage." In 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS).


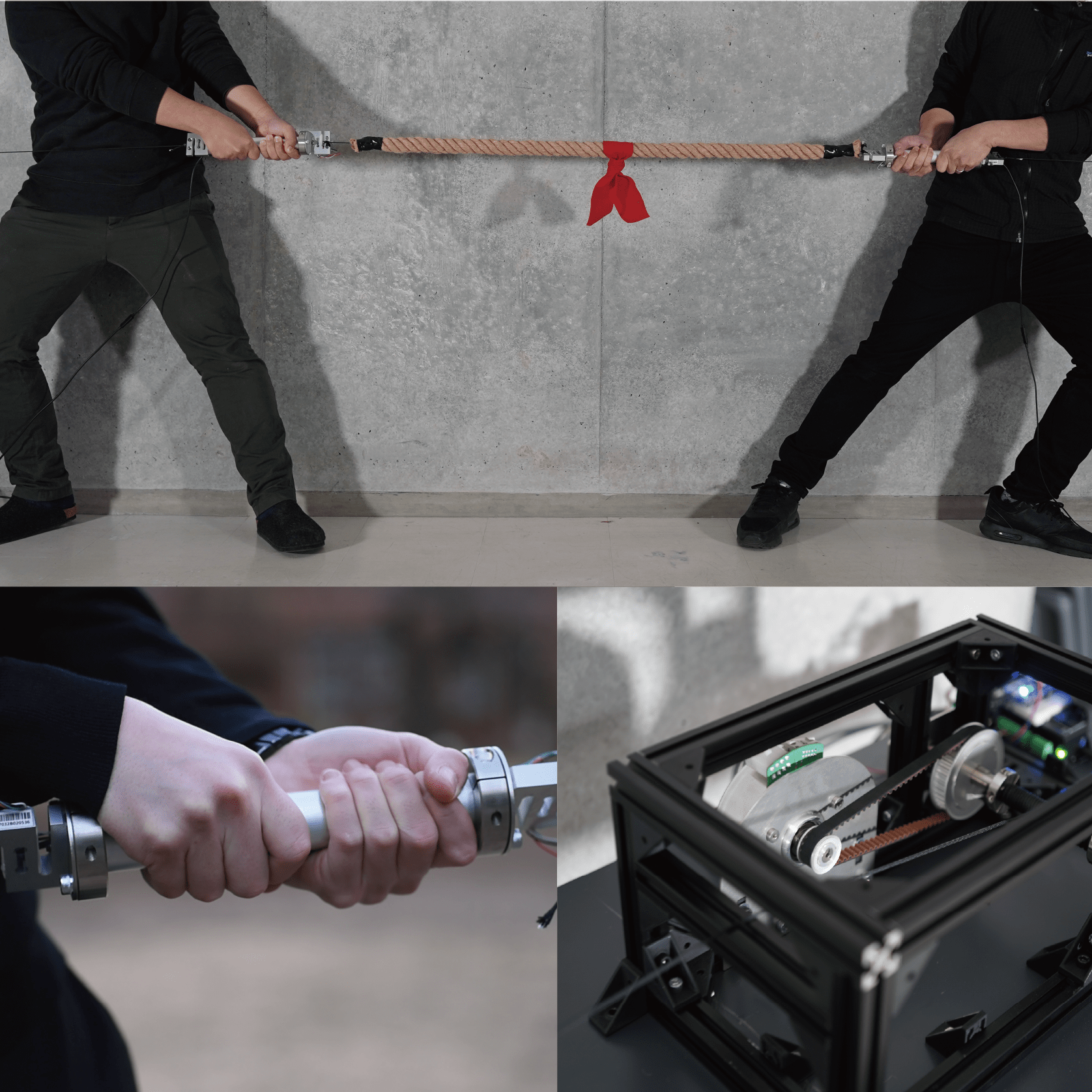

The Tight Game

Physical assistance can even out individual differences in ability between players to create well-balanced inter-personal physical games. However, ‘explicit’ intervention can ruin the players’ sense of agency and cause a loss of engagement in both the players and the audience. We propose an implicit physical intervention system, “The Tight Game”, for ‘Tug of War’, a one-dimensional physical game. Our system includes four force sensors connected to the rope and two hidden high-torque motors, which provide real-time physical assistance. We designed the implicit physical assistance by leveraging how humans perceive external forces during physical actions. In The Tight Game, a pair of players engage in a tug of war and believe that they are participating in a well-balanced, tight game. In reality, however, an external system or person mediates the game, performing physical interventions without the players noticing.

publication:

- Azumi Maekawa, Shunichi Kasahara, Hiroto Saito, Daisuke Uriu, Ganesh Gowrishankar, and Masahiko Inami. "The Tight Game: Implicit Force Intervention in Inter-personal Physical Interactions on Playing Tug of War." ACM SIGGRAPH 2020 Emerging Technologies, ACM, 2020.


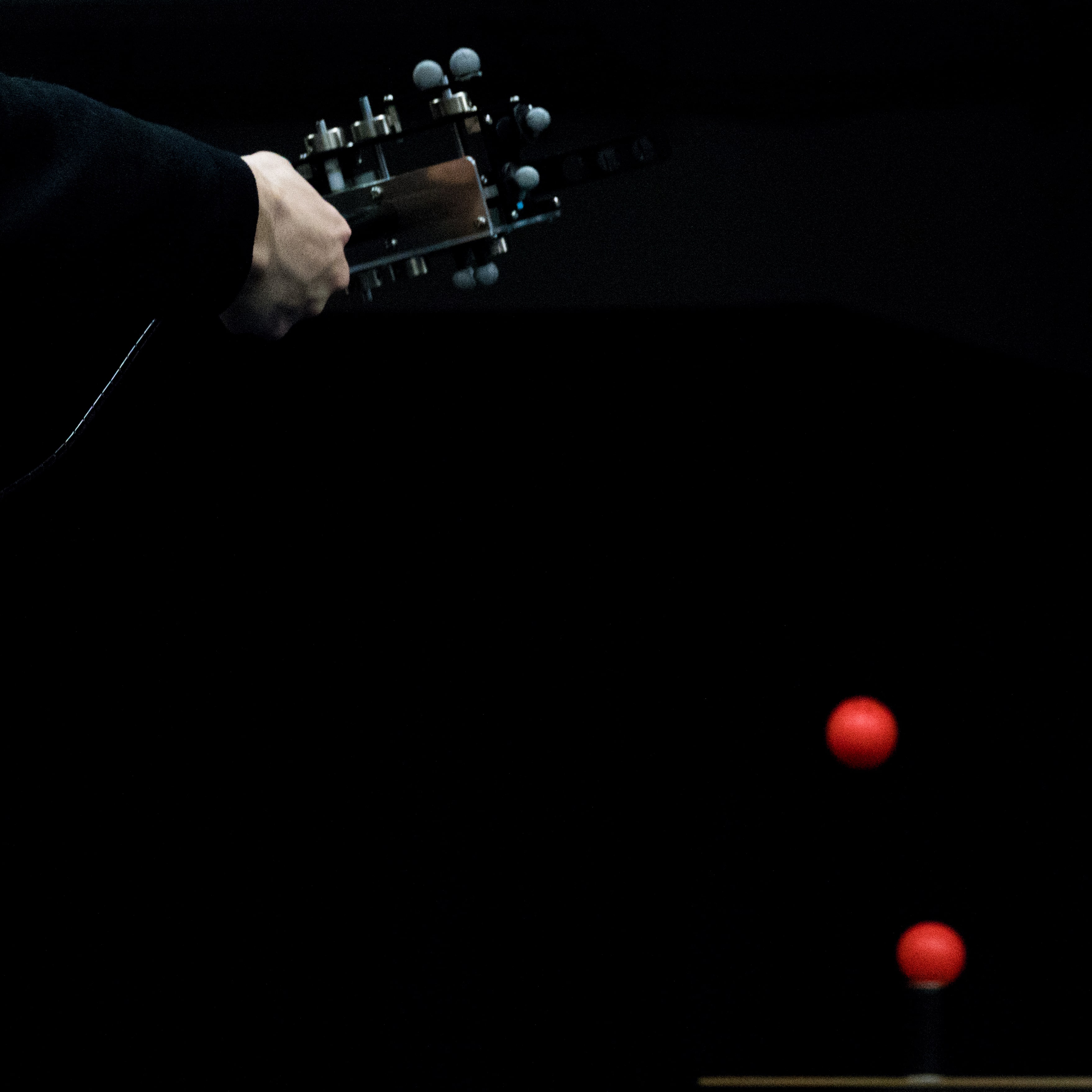

PickHits

Hitting a target feels great. We propose a system that generates this experience computationally. The system consists of external tracking cameras and a handheld device that holds and releases a thrown object. As a proof of concept, we built the system around two key elements: a low-latency release device and constant model-based prediction. During the user’s throwing motion, the ballistic trajectory of the thrown object is predicted in real time, and the object is released when the predicted trajectory coincides with the desired one. We found that the system can generate a computational hitting experience within a limited spatial range.
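The release logic described above can be sketched in a few lines. This is a minimal illustration, not the system's implementation: it assumes a drag-free point mass in a 2D vertical plane, ignores the release device's latency, and takes the object's tracked position and velocity as given. The names `predicted_landing_x` and `should_release`, and the tolerance value, are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def predicted_landing_x(x, y, vx, vy, target_height=0.0):
    """Horizontal position at which an object released now would reach
    target_height, assuming drag-free ballistic flight.
    Returns None if the object never reaches that height."""
    # Solve y + vy * t - 0.5 * G * t**2 = target_height for the later root.
    disc = vy * vy + 2.0 * G * (y - target_height)
    if disc < 0.0:
        return None
    t = (vy + math.sqrt(disc)) / G
    return x + vx * t


def should_release(x, y, vx, vy, target_x, tolerance=0.05):
    """True when the predicted landing point is within tolerance (metres)
    of the desired target position."""
    landing = predicted_landing_x(x, y, vx, vy)
    return landing is not None and abs(landing - target_x) <= tolerance
```

In a tracking loop, `should_release` would be evaluated on every new camera sample during the throwing motion, and the device would let go on the first sample for which it returns `True`. A real system would also need to compensate for the delay between that decision and the physical release.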

publication:

- Azumi Maekawa, Seito Matsubara, Sohei Wakisaka, Daisuke Uriu, Atsushi Hiyama, and Masahiko Inami. "Dynamic Motor Skill Synthesis with Human-Machine Mutual Actuation." In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, pp. 1-12.

- Azumi Maekawa, Seito Matsubara, Atsushi Hiyama, and Masahiko Inami. "PickHits: Hitting Experience Generation with Throwing Motion via a Handheld Mechanical Device." ACM SIGGRAPH 2019 Emerging Technologies. ACM, 2019.

credit:

Azumi Maekawa, Seito Matsubara


Stand

The hardware design of a robot usually reflects its purpose: legs for walking, arms for reaching, hands for grasping, and so on. The functions of mechanical systems are explicitly defined by designers, and simple shapes consisting of straight lines or circular arcs suit those clearly defined functions. On the other hand, our real environment is full of diverse shapes. These complex shapes have been formed by various factors such as biological growth, aging, and weathering. By using diverse objects to create robots, we might be able to see behaviors and motions we’ve never seen. We aim to find unpredictable motions we could not discover with simple shapes. In this work, we focus on stand-up behavior as a primitive function of the robot. As the materials from which the robots are bricolaged, we select tree branches as objects with diverse shapes. The robots' poses are generated with the aim of maximizing the height of their bodies. Robots with diverse body shapes change their poses by repeating trial and error in real time. Through the process of learning, this work portrays new functions and meanings given to commonplace objects.

exhibition:

-

Self Motto Satellite 2018 Autumn, knowledgscape

KANA KAWANISHI GALLERY, Tokyo, Japan, 2018

credit:

Azumi Maekawa


Photo by Yasushi Kato

Aerial-Biped

Since ancient times, there has been a desire to create robots capable of life-like motions and behaviors. As a life-like motion, we focus on bipedal walking, which is one of the key features of a legged robot. The movement of a legged robot is highly restricted by gravity. Once its body shape is defined, the possible walking gaits are almost determined accordingly. Therefore, it is difficult to realize both arbitrary body design and dynamic, light-footed walking like that of living things in a legged robot. Aerial-Biped is a prototype for exploring a new experience with a physical biped robot. In this work, we use a quadrotor to separate body shape design from motion design. Releasing the biped robot from gravity relaxes the limitations on its physical motion. The motion of Aerial-Biped is created in real time according to the movement of the quadrotor by a motion generator trained using deep reinforcement learning. We can observe various gaits emerge as the quadrotor's movements change from moment to moment.

publication:

- Azumi Maekawa, Ryuma Niiyama, and Shunji Yamanaka. "Pseudo-Locomotion Design with a Quadrotor-Assisted Biped Robot." In 2018 IEEE International Conference on Robotics and Biomimetics (ROBIO), pp. 2462-2466. IEEE, 2018.

- Azumi Maekawa, Ryuma Niiyama, and Shunji Yamanaka. "Aerial-biped: a new physical expression by the biped robot using a quadrotor." ACM SIGGRAPH 2018 Emerging Technologies. ACM, 2018.

exhibition:

-

Ars Electronica, "Out of the Box. The Midlife Crisis of the Digital Revolution"

PostCity, Linz, Austria, 2019

-

Parametric Move

UTokyo-IIS Prototyping & Design Laboratory, Tokyo, Japan, 2018

media:

- IEEE Spectrum, 13 Aug, 2018

- Reuters, 2 Oct, 2018

- ASCII, 17 Jul, 2018

- designboom, 14 Aug, 2018

- TechCrunch, 14 Aug, 2018

credit:

Azumi Maekawa, Shunji Yamanaka


publication

journal paper

-

Ryutaro Watanabe*, Azumi Maekawa*, Michiteru Kitazaki, Yasuaki Monnai, Masahiko Inami. "Redirection Detection Thresholds for Avatar Manipulation with Different Body Parts." IEEE Transactions on Visualization and Computer Graphics (2025). (* These authors contributed equally.)

[pdf] [doi]

-

Hiroto Saito*, Arata Horie*, Azumi Maekawa*, Seito Matsubara*, Sohei Wakisaka, Zendai Kashino, Shunichi Kasahara, Masahiko Inami. "Transparency in Human-Machine Mutual Action." Journal of Robotics and Mechatronics 33.5 (2021), pp. 987-1003. (* These authors contributed equally.)

[pdf] [doi]

international conference full paper

-

Artin Saberpour Abadian, Ata Otaran, Martin Schmitz, Marie Muehlhaus, Rishabh Dabral, Diogo Luvizon, Azumi Maekawa, Masahiko Inami, Christian Theobalt, Jürgen Steimle. "WRLKit: Computational Design of Personalized Wearable Robotic Limbs." In The 36th Annual ACM Symposium on User Interface Software and Technology (UIST) (2023)

[pdf] [doi]

-

Azumi Maekawa, Hiroto Saito, Daisuke Uriu, Shunichi Kasahara, Masahiko Inami. "Machine-Mediated Teaming: Mixture of Human and Machine in Physical Gaming Experience." In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems

[pdf] [doi]

-

Masahiko Inami, Daisuke Uriu, Zendai Kashino, Shigeo Yoshida, Hiroto Saito, Azumi Maekawa, Michiteru Kitazaki. "Cyborgs, Human Augmentation, Cybernetics, and JIZAI Body." The Augmented Humans (AHs) International Conference 2022

Honorable Mention Award [pdf] [doi]

-

Riku Arakawa, Azumi Maekawa, Zendai Kashino, Masahiko Inami. "Hand with Sensing Sphere: Body-Centered Spatial Interactions with a Hand-Worn Spherical Camera." In Symposium on Spatial User Interaction, pp. 1-10.

[pdf] [doi]

-

Azumi Maekawa, Kei Kawamura, and Masahiko Inami. "Dynamic Assistance for Human Balancing with Inertia of a Wearable Robotic Appendage." In 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS).

[pdf] [doi]

-

Azumi Maekawa, Seito Matsubara, Sohei Wakisaka, Daisuke Uriu, Atsushi Hiyama, and Masahiko Inami. "Dynamic Motor Skill Synthesis with Human-Machine Mutual Actuation." In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, pp. 1-12. Honorable Mention Award [Top 5%]

[pdf] [doi]

-

Azumi Maekawa, Shota Takahashi, MHD Yamen Saraiji, Sohei Wakisaka, Hiroyasu Iwata, and Masahiko Inami. "Naviarm: Augmenting the Learning of Motor Skills using a Backpack-type Robotic Arm System." In Proceedings of the 10th Augmented Human International Conference 2019, pp. 1-8.

[pdf] [doi]

-

Azumi Maekawa, Ryuma Niiyama, and Shunji Yamanaka. "Pseudo-Locomotion Design with a Quadrotor-Assisted Biped Robot." In 2018 IEEE International Conference on Robotics and Biomimetics (ROBIO), pp. 2462-2466.

[pdf] [doi]

short paper

-

Daisuke Uriu, Shuta Iiyama, Taketsugu Okada, Takumi Handa, Azumi Maekawa, Masahiko Inami. "STAMPER: Human-machine Integrated Drumming." In Extended Abstracts of the 2023 CHI Conference on Human Factors in Computing Systems (CHI EA '23), Article 259, pp. 1-5.

[pdf] [doi]

demo & workshop (peer-reviewed)

-

Azumi Maekawa, Hiroto Saito, Narin Okazaki, Shunichi Kasahara, and Masahiko Inami. "Behind The Game: Implicit Spatio-Temporal Intervention in Inter-personal Remote Physical Interactions on Playing Air Hockey." ACM SIGGRAPH 2021 Emerging Technologies.

[pdf] [doi]

-

Azumi Maekawa, Shunichi Kasahara, Hiroto Saito, Daisuke Uriu, Ganesh Gowrishankar, and Masahiko Inami. "The Tight Game: Implicit Force Intervention in Inter-personal Physical Interactions on Playing Tug of War." ACM SIGGRAPH 2020 Emerging Technologies.

[pdf] [doi]

-

Azumi Maekawa, Seito Matsubara, Atsushi Hiyama, and Masahiko Inami. "PickHits: Hitting Experience Generation with Throwing Motion via a Handheld Mechanical Device." ACM SIGGRAPH 2019 Emerging Technologies.

[pdf] [doi]

-

Azumi Maekawa, Shota Takahashi, MHD Yamen Saraiji, Sohei Wakisaka, Hiroyasu Iwata, and Masahiko Inami. "Demonstrating Naviarm: Augmenting the Learning of Motor Skills using a Backpack-type Robotic Arm System." In Proceedings of the 10th Augmented Human International Conference 2019, p. 48. Best Demo Award

[pdf] [doi]

-

Azumi Maekawa, Ayaka Kume, Hironori Yoshida, Jun Hatori, Jason Naradowsky, and Shunta Saito. "Improvised Robotic Design with Found Objects." Workshop at Thirty-second Conference on Neural Information Processing Systems (NeurIPS), 2018 (Oral Presentation).

[pdf]

-

Azumi Maekawa, Ryuma Niiyama, and Shunji Yamanaka. "Aerial-biped: a new physical expression by the biped robot using a quadrotor." ACM SIGGRAPH 2018 Emerging Technologies.

[pdf] [doi]

exhibition

-

Mugen Room by Air Max DN8

Paradox of the Mass

Ryota Kito + Azumi Maekawa

Sankei-Shimbun Koto Center, Tokyo, Japan

2025

-

未来の原画展

Stand

Yamanaka Laboratory, Institute of Industrial Science, the University of Tokyo, Tokyo, Japan

2022.11.17 - 12.4

-

AkeruE

Stand

Panasonic Center Tokyo, Tokyo, Japan

2021

-

The 23rd Japan Media Arts Festival

PickHits

Miraikan - The National Museum of Emerging Science and Innovation, Tokyo, Japan

2020

-

Ars Electronica, "Out of the Box. The Midlife Crisis of the Digital Revolution"

Aerial-Biped

PostCity, Linz, Austria

2019

-

Self Motto Satellite 2018 Autumn, knowledgscape

Stand

KANA KAWANISHI GALLERY, Tokyo, Japan

2018

-

Parametric Move

Aerial-Biped, Walk

UTokyo-IIS Prototyping & Design Laboratory, Tokyo, Japan

2018

-

iii Exhibition 19 "WYSIWYG?"

How to Walk Branches

UTokyo, Tokyo, Japan

2017

-

iii Exhibition Extra 2017 "SUKIMANIAC"

Still Warm

UTokyo, Tokyo, Japan

2017

awards

-

25th Japan Media Arts Festival Art Division Jury Selections

第25回文化庁メディア芸術祭 アート部門 審査委員会推薦作品

-

The Augmented Humans (AHs) International Conference 2022 Honorable Mention Award

-

Recognized as INNO-β in the INNO-vation Program

総務省 異能vationプログラム 異能β認定

-

The 2021 INNO-vation Disruptive Challenge

総務省 異能vation 2021年度「破壊的な挑戦部門」挑戦者

-

The 2021 James Dyson Award National Finalists

James Dyson Award 国内準優秀賞

-

23rd Japan Media Arts Festival Entertainment Division New Face Award

第23回文化庁メディア芸術祭 エンターテインメント部門 新人賞

-

The ACM CHI 2020 Honorable Mention Award [top 5%]

-

YouFab Global Creative Award 2019, Special Prize

-

Augmented Human International Conference 2019 Best Demo Award

media

-

The University of Tokyo RCAST NEWS

"Azumi MAEKAWA won the 2021 James Dyson Award National finalists"

21 Sep, 2021

-

IEEE Spectrum Video Friday

“Video Friday: Android Printing Your weekly selection of awesome robot videos”

30 Jul, 2021

-

The University of Tokyo RCAST NEWS

“Azumi MAEKAWA's work was chosen as 23rd Japan Media Arts Festival’s Award-winning Works”

02 Jun, 2020

-

Arduino Blog

“Random sticks made to walk under Arduino control”

05 Jul, 2019

-

IEEE Spectrum

“Robots Made Out of Branches Use Deep Learning to Walk”

02 Jul, 2019

-

IEEE Spectrum

“Aerial-Biped Is a Quadrotor With Legs That Can Fly-Walk”

13 Aug, 2018

-

Reuters

“Quadcopter for a head helps robot walk tall”

2 Oct, 2018

-

美術手帳

Aug, 2018

-

ASCII

17 Jul, 2018

-

designboom

“aerial biped robot dances around using a quadrotor for balance and movement”

14 Aug, 2018

-

TechCrunch

“This bipedal robot has a flying head”

14 Aug, 2018

contact

-

azumimaekawa[at]gmail.com